This process of creating an effective prompt is called prompt engineering, and it has been shown that by just changing the prompt, language models performs better! For our use case, we can start with a very simple prompt format. So, in our prompt, we should pass a single tweet after Tweet: prefix and expect the model to predict the sentiment on the next line after Sentiment: prefix. Let’s understand it this way, we want to provide the tweet as the input and want the sentiment as output. Now the next obvious question should be, how can we transform the sentiment detection task as a text generation one? The answer is quite simple, all we have to do is create an intuitive prompt (template with data) that could reflect how a similar representation could occur on the web. For this article, we will pick 117M sized GPT-2, 125M sized GPT-Neo and 220M sized T5. One point to note, each model further releases several versions based on the tunable parameter size. T5 paper showcase that using the complete encoder-decoder architecture (of the transformer) is better than only using the decoder (as done by the GPT series), hence they stay true to the original transformer architecture.Ī brief comparison of the different models is shown below.

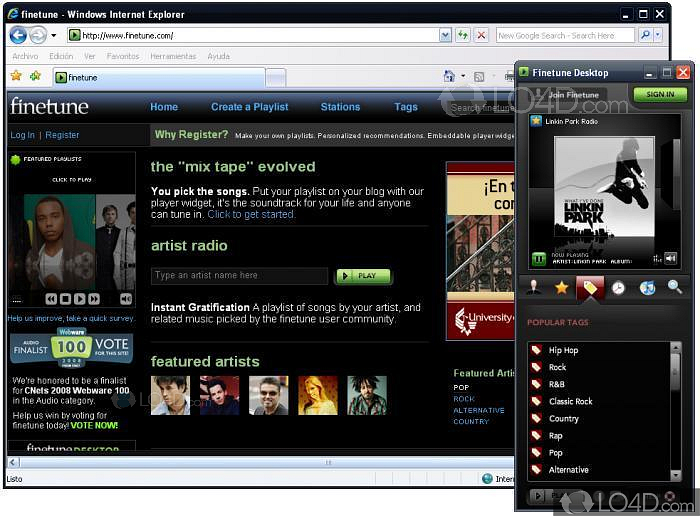

Finetune for firefox series#

In fact, this series of GPT models made the language model famous! GPT stands for “Generative Pre-trained Transformer”, and currently we have 3 versions of the model (v1, v2 and v3). GPT-2: It is the second iteration of the original series of language models released by OpenAI.In this article, we will be concerned about the following models, This is very intuitively shown by T5 authors, where the same model can be used to do language translation, text regression, summarization, etc. The recent surge in interest in this field is due to two main reasons, (1) the availability of several high performance pre-trained models, and (2) it's very easy to transform a large variety of NLP based tasks into the text-in text-out type of problem. They are called language models, as they can be used to predict the next word based on the previous sentences. Usually, we apply some form of the Sequence-to-Sequence model for this task. Text generation is an interesting task in NLP, where the intention is to generate text when provided with some prompt as input. Let's get started! Text generation models We will also compare their performance by fine-tuning on Twitter Sentiment detection dataset. This is called fine-tuning, and in this article, we will practically learn the ways to fine-tune some of the best (read state-of-the-art) language models currently available. If the audiences (including you and me) were not impressed with their tunable parameter’s size going into billions, we were enthralled by the ease with which they can be used for a completely new unseen task, and without training for a single epoch! While this is okay for quick experiments, for any real production deployment, it is still recommended to further train the models for the specific task. Recent researches in NLP led to the release of multiple massive-sized pre-trained text generation models like GPT- and T5. You can also connect with me on LinkedIn. 
For more such articles visit my website or have a look at my latest short book on Data science. To read more about text generation models, see this.
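The `Tweet:` / `Sentiment:` prompt format above can be sketched in plain Python. This is a minimal illustration, not the article's own code. The helper names `build_prompt`, `build_t5_pair` and `parse_sentiment` are hypothetical. The label set (`positive`, `negative`, `neutral`) is an assumption about the dataset. Reusing the `Tweet:` prefix on the T5 encoder input is also an assumption. The sketch reflects the architectural difference described above. A decoder-only model (GPT-2, GPT-Neo) sees the tweet and label as one continuous text sequence. An encoder-decoder model (T5) receives the tweet as encoder input and the label as the decoder target.

```python
from typing import Optional, Sequence, Tuple

# Assumed label set; the actual dataset's labels may differ.
LABELS = ("positive", "negative", "neutral")


def build_prompt(tweet: str, sentiment: Optional[str] = None) -> str:
    """Decoder-only (GPT-2 / GPT-Neo style) prompt.

    For fine-tuning, the label is appended so the model learns to continue the
    text with it; at inference time it is omitted and the model generates it.
    """
    prompt = f"Tweet: {tweet.strip()}\nSentiment:"
    if sentiment is not None:
        prompt += f" {sentiment}"
    return prompt


def build_t5_pair(tweet: str, sentiment: str) -> Tuple[str, str]:
    """Encoder-decoder (T5 style): tweet goes to the encoder, label is the decoder target."""
    return f"Tweet: {tweet.strip()}", sentiment


def parse_sentiment(generated: str, labels: Sequence[str] = LABELS) -> Optional[str]:
    """Return the first word after the first 'Sentiment:' marker if it is a known label.

    Uses the first marker because decoded GPT outputs usually echo the prompt,
    and the model may keep generating further 'Tweet:/Sentiment:' pairs after it.
    """
    _, sep, tail = generated.partition("Sentiment:")
    if not sep:
        return None  # no marker found in the text
    words = tail.strip().split()
    if not words:
        return None  # nothing was generated after the marker
    first = words[0].strip(".,!?").lower()
    return first if first in labels else None
```

For example, `build_prompt("I love this phone!", "positive")` yields the two-line training text `Tweet: I love this phone!` / `Sentiment: positive`. At inference time, `build_prompt("I love this phone!")` stops right after `Sentiment:` and leaves the label for the model to fill in.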

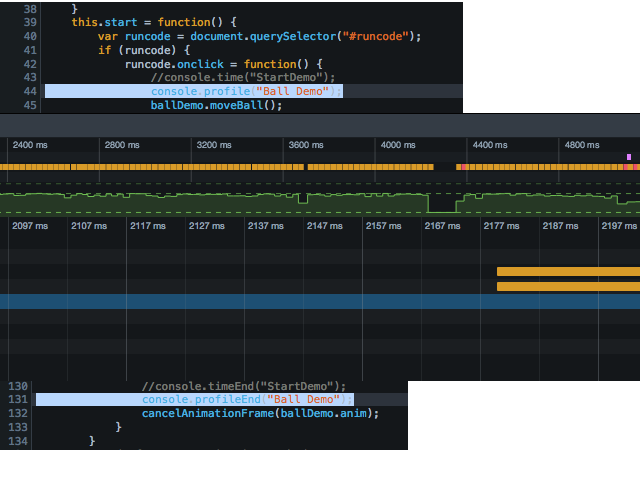

Code

The code used in this article can be found here - GPT and T5.
